Why Global App Testing is the go-to partner for AI-powered digital quality

Users interact with products across diverse devices, browsers, networks, and local settings that internal teams cannot fully replicate, making early testing essential. Features that perform well in internal testing may still fail with real users after release.

As products launch faster and reach more devices and regions, maintaining consistent quality is increasingly difficult. Teams need confidence that updates work for real users under varying networks, devices, and local conditions.

In this blog, we explain how Global App Testing enables teams to deliver reliable, high-quality digital experiences through real-world and crowd testing.

What makes Global App Testing a trusted QA partner?

Global App Testing helps teams navigate AI complexity by validating outputs, integration, and edge cases, giving confidence in both model reliability and user experience.

Our AI testing framework covers the key dimensions needed to deliver consistent, high-quality experiences: output quality, stability under real usage, and fairness across contexts.

- Validating AI outputs: We test model responses against actual user inputs to find edge cases, logic loops, or unexpected behavior before release.

- Stability under real usage: Through AI testing with real users, we assess how your systems perform as inputs, traffic, and interaction patterns change across devices and regions.

- Bias and consistency checks: We identify inconsistent or biased outputs by testing responses across languages, regions, and demographic contexts. This allows teams to provide fair and inclusive experiences that work for all users.

Teams can leverage real-world testing to uncover issues that matter most to users and extend this approach to the complex, variable behavior of AI systems.

How do we help teams test AI and machine learning systems?

AI systems produce unpredictable outputs, which is why Global App Testing tests them through real-user interactions rather than relying only on fixed test cases. Testing with real users captures AI behavior across diverse scenarios, helping teams achieve reliable and equitable results. Below are the common areas our teams test in AI-powered systems:

- Reliable chat interactions: Testing responses across different questions, languages, and ways people interact to ensure consistency.

- Validating system results: Making sure outputs match the expected goals and work as intended in practical scenarios.

- Fairness and equity checks: Spotting behaviors that could create bias or inconsistent experiences for users.

- Stress-testing unusual situations: Examining rare or unexpected inputs to uncover problems before they affect users.

Why real-world testing matters:

- Test across real devices and setups: Rather than relying only on simulations, observing how people actually use features on different devices and browsers shows where things work and where they don’t.

- Catch unusual situations early: Real users sometimes do things that scripted tests never anticipate. Spotting these cases before launch prevents surprises.

- Find usability problems: Watching people interact with the app reveals workflow and interaction issues that internal testing alone often misses.

To see how real users experience the product, teams use crowdtesting. This goes beyond internal tests and uncovers problems that controlled environments might miss.

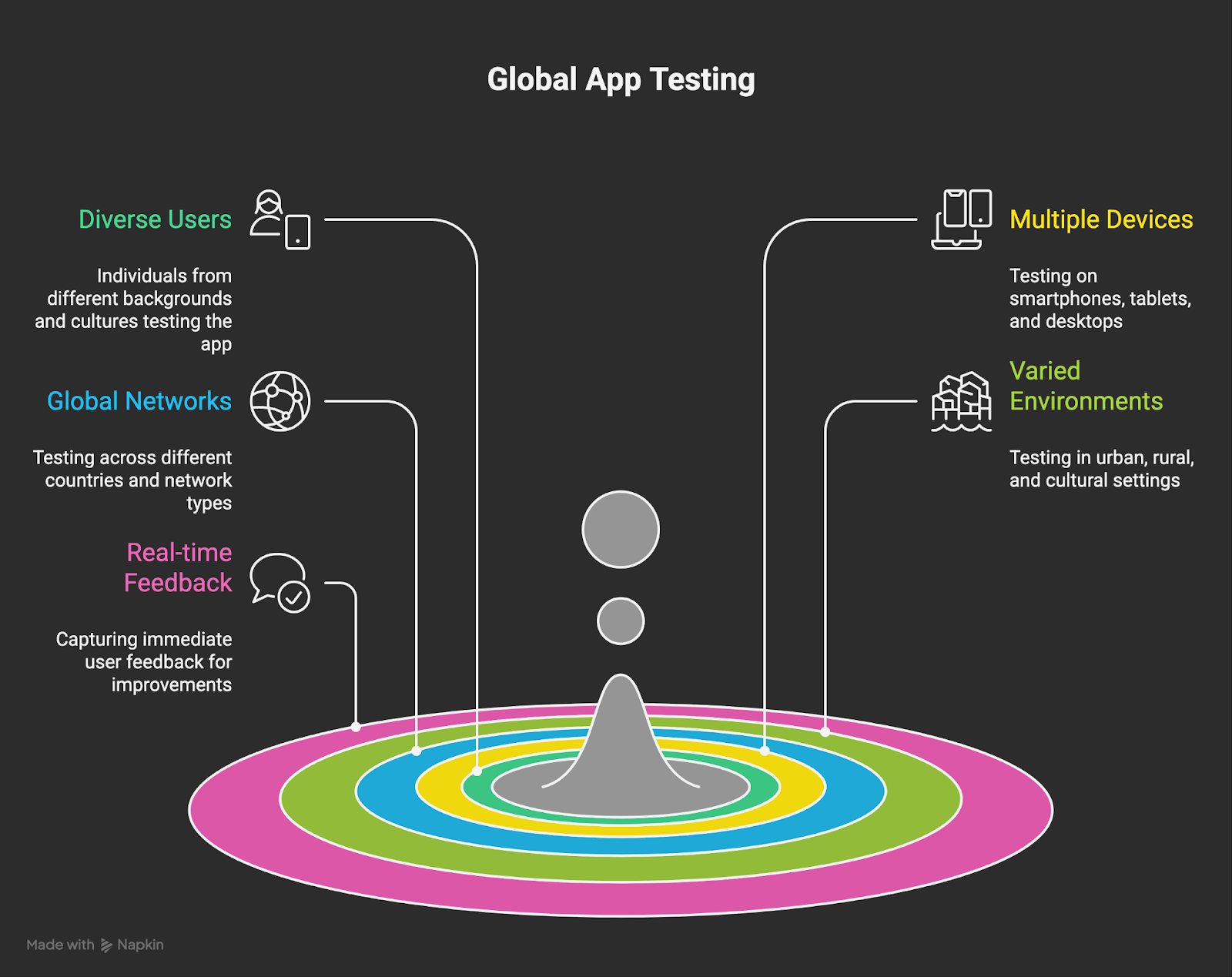

What is crowdtesting and why does it matter?

Crowdtesting is the practice of testing software by engaging a distributed group of real users to evaluate applications under real-world conditions. This approach goes beyond lab tests to reveal authentic user behaviors and uncover issues that controlled environments might miss.

Global crowdtesting in action

Teams working with Global App Testing benefit from crowdtesting in the following ways:

- Customer retention: We observe chatbots in real interactions, checking tone, responses, and how users actually experience them. This helps catch frustrating moments before users leave.

- Global product reach: We validate intelligent features such as chatbots, recommendation engines, and automated workflows across languages, regions, and cultural contexts, helping products scale into new markets without localization issues.

- End-to-end coverage: We validate complete user journeys to ensure intelligent features work smoothly across core workflows, backend systems, and third-party integrations.

- Faster regression cycles: By testing continuously across frequent releases, we surface high-risk issues earlier, shortening regression cycles without reducing coverage.

- Consistent user experience: Feature behavior is checked across devices, network conditions, and real usage patterns to deliver dependable performance at scale.

Crowdtesting often highlights critical usability and device issues that scripted tests miss. For instance, Carry1st used Global App Testing to evaluate checkout flows across regional devices and uncovered payment compatibility problems that were limiting conversions. After addressing these, the team saw a 12% increase in local checkout completion rates.

Crowdtesting shows how a product is actually used, reflecting how users behave outside scripted tests.

What kind of results do our customers see?

For engineering leads, understanding how quality impacts the business is critical. Actionable insights and early issue detection provide clear visibility into system performance across today’s complex environments.

Key benefits for engineering leads include:

- Accelerated releases with AI confidence: Early real-user AI testing shortens regression cycles while reducing post-release defects.

- Consistent global experience: We validate responses and key functional flows such as logins, payments, and content recommendations across regions. This ensures a reliable experience that supports user retention and satisfaction.

- Actionable prioritization: Reports surface the most critical issues, enabling teams to focus their efforts on the areas with the greatest impact.

- Full-cycle QA coverage: Comprehensive end-to-end validation ensures systems operate reliably and minimizes operational risk.

- Customer retention: Consistent quality in AI products helps retain existing customers and maintain business continuity.

For example, we worked with Facebook to significantly scale its testing footprint. Rather than manually testing only a few thousand partner apps, Facebook dramatically expanded its QA coverage within the same timeframe, enabling faster defect discovery and greater release confidence.

Engineering leaders gain a clear view of user feedback and system performance, enabling them to tackle key issues, avoid post-launch problems, and deliver reliable, high-quality experiences across all platforms.

What does digital quality look like today?

To build high-quality digital products, testing must occur at every stage. Regular checks help teams confirm that features actually work on different devices and networks, keep products usable, meet basic compliance needs, and avoid rework later.

Embedding testing into everyday development work delivers:

- Full scenario coverage: Testing spans a wide range of devices, networks, and regional conditions, helping ensure consistent and reliable performance.

- Proactive issue detection: Teams identify potential problems before they reach users or affect operations.

- Streamlined team action: Findings are integrated into workflows, allowing teams to resolve issues quickly and efficiently.

- Guided release planning: Actionable insights inform decisions, supporting faster and higher-quality releases.

By integrating testing into the development workflow, we help teams deliver high-quality releases while keeping internal processes efficient.

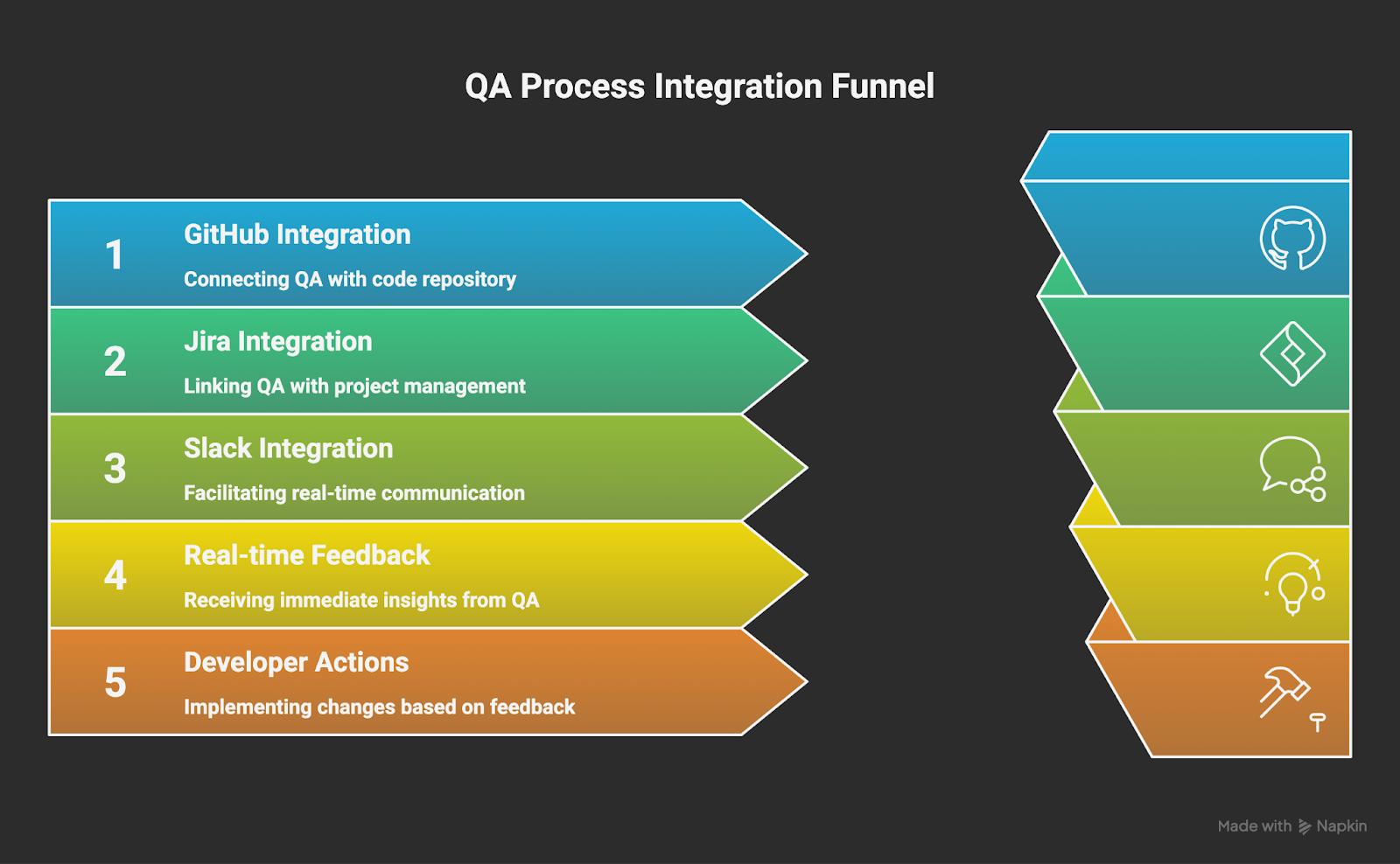

How do we fit into your workflow?

Global App Testing doesn’t act as a separate step. Instead, it works directly within development workflows, so teams see feedback when needed, fix issues right away, and keep releases moving without compromising quality.

Testing is integrated into the workflow.

How workflow-aligned testing supports delivery:

- Teams catch bugs and notice usability problems as they work, instead of waiting until later.

- Findings flow into tools teams already use every day, such as GitHub, Jira, and Slack, helping them maintain momentum.

- Reports with screenshots and simple steps make it clear what needs fixing first.

- Sharing what’s learned across QA, product, and engineering keeps everyone coordinated.

- Spotting serious issues early helps prevent delays and makes the product more dependable.

When testing occurs as part of the workflow, we review features, logins, payment steps, and content interactions with real users. This approach helps catch issues early and maintain a consistent user experience.

What types of functional testing do we offer?

Global App Testing reviews the core workflows users rely on to confirm real-world performance. Coverage is focused on critical and high-priority areas to reduce defects and limit disruption for customers.

The following functional testing approaches help teams concentrate on the areas that have the greatest impact on users and business outcomes.

| Functional testing type | Purpose | Key benefits |
|---|---|---|
| Risk-based testing | Focuses on critical workflows, including login, payments, and integrations. | Prevents high-impact defects in key user journeys. |
| Cross-browser and device testing | Tests across browsers and devices to maintain consistent performance on all platforms. | Ensures a smooth experience for every user. |
| Release-aligned coverage | Adapts testing to each release or feature update. | Focuses on areas that most affect end-user experience. |
| High-value feature validation | Confirms core features such as onboarding, checkout, and search work as intended. | Builds confidence that essential functionality is reliable. |

Focused testing provides early, actionable feedback, enabling teams to identify high-priority issues, address them before release, and maintain consistent quality throughout development.

Why is real-time feedback a game-changer?

Real-time feedback allows teams to address issues immediately, reducing rework. Detailed reports highlight problems, user impact, and recommended actions, keeping workflows efficient across devices.

Primary advantages are:

- Actionable insights: Help teams fix issues faster and work better across QA, product, and engineering.

- Early detection: Identifies high-risk problems to keep releases on schedule and avoid rework.

- Contextual feedback: Shows where issues occur, why they matter, and what to fix.

- Focused workflows: Concentrating on the parts users interact with ensures testing targets what really counts.

In fast-paced development environments, immediate insight into problems helps teams deliver reliable experiences across devices and workflows.

How do we help you build for everyone?

Building for everyone requires products that function across abilities, devices, and networks. GAT tests with real users across diverse scenarios to uncover hidden issues and support accessibility, inclusivity, and compliance with the Web Content Accessibility Guidelines (WCAG).

Benefits of this inclusive testing approach include:

- Spotting accessibility gaps across devices, assistive tools, and network conditions.

- Improving usability for all users, including those with disabilities or slower connections.

- Providing practical insights to guide design and development decisions.

- Helping meet accessibility standards and comply with regulations.

- Building strong user confidence by delivering products that perform reliably for everyone.

With these benefits, teams can confidently deliver products that perform reliably, are accessible to all users, and provide a seamless experience across devices and networks.

Why do leading brands choose Global App Testing?

With global coverage, fast feedback, and practical testing insights, leading brands can grow their QA efforts without overloading in-house teams. Whether they are shipping AI features or multi-region updates, teams can meet high standards on every release while moving quickly and making decisions with confidence.

Primary reasons brands rely on Global App Testing include the ability to:

- Validate product behavior across devices and regions to prevent market-specific issues.

- Receive timely feedback that enables teams to fix problems before users encounter them.

- Offload routine testing from internal teams, freeing up time for development and innovation.

- Support complex product launches, including integrations and frequent releases.

- Build confidence in delivering reliable user experiences, protecting brand reputation, and reducing post-release issues.

Leading teams use Global App Testing to maintain quality across regions and devices. For example, Canva expanded QA coverage as its product scaled globally. Testing across different markets revealed localization and performance issues early, allowing for smoother growth and a user experience that feels native in every region.

With GAT as a testing partner, teams get products out faster, face fewer issues, and deliver a more seamless user experience.

Key takeaways

Teams need to identify problems before users notice them to reduce the need for urgent, costly fixes. Global App Testing reveals where workflows break and shows the actual user impact. Immediate feedback enables fast action and supports consistent app performance.

Take the next step in digital quality: explore how Global App Testing can help your team deliver reliable, high-quality, and inclusive experiences across devices, regions, and real-world scenarios.

Ship reliable releases with real-world regression testing from Global App Testing.