5 Best Practices to Accelerate Code Review

I ❤️ doing code reviews. Really! Reviewing code allows me to stay up-to-date with the system state, learn from others, ensure quality and share my knowledge and experience. Recently, my team has grown and some less experienced engineers have joined. It turned out they found my comments in code reviews a great way to learn, but on the other hand, a bit overwhelming and blocking. Sometimes, their pull requests were hanging in code reviews unnecessarily and frustratingly long. I would like to share some practices that I started experimenting with that have helped my teammates merge pull requests quicker, reduce frustration and upskill along the way.

The story

Before my team grew, I predominantly worked with very experienced engineers that I’d known for years. I didn't have to think about how they would react to my reviews - they somehow knew intuitively which comments they were supposed to apply, which were "nice-to-haves," where we needed a further discussion and what the overall review result was. Our system worked magically and effortlessly.

After younger, eager to learn developers joined, it turned out that they tended to apply all of the suggested changes. When I asked for feedback about my code review, one of them said: "When I see a suggestion from a much more experienced dev, I assume that I should apply it." That was a game changer for me. That moment I recalled the last Pull Request where I left ~50 comments and just imagined how frustrating it must have been for my new teammate. OMG.

After discussing the topic, here are the best practices that we came up with:

#1: Traffic lights

I categorized code review comments by the importance and actions that I would expect to be taken, resulting in three categories:

-

Blockers - things that block me from approving a Pull Request (e.g. bad design, rule break, code smell, security issue, missing spec)

-

Warnings - things that worry me or I don't understand (e.g. I don't get the context, need to make sure that something was checked)

-

Improvements - mostly small refactorings, cleanups, syntax sugars (many people, especially from the open-source community, would call them "nits")

It reminded me of traffic lights, so I assigned colors to them: Blockers, Warnings and Improvements.

I paired with my teammate again and explained actions that I would expect in response to a certain type of comment:

-

Blockers - we need to change this,

-

Warnings - let's check and discuss and the discussion should resolve into Blocker or Improvement (or just no action when everything is fine),

-

Improvements - we can skip or apply later.

While for blockers and improvements it is quite clear and binary, reactions to warnings can vary case-by-case. For example, when you ask a question, the next steps depend on the answer.

Pro-tip: If there are limited possible answers to your question, you can proactively share your expectations for each of them.

Example: Is it A or B? If A then we need to use XYZ (blocker), otherwise we can stick to your solution (no action).

We just reduced one feedback loop. Yey!

Warnings could also be calls to discuss something with a ticket/story owner (e.g. product manager or designer). Then we need to wait for the decision that would resolve to something else.

It's hard to list all cases here, but I hope that the general idea is quite clear.

When I explained this to my new colleague, he said: "Hey, this is exactly what I need from you!"

Then I realized that it could also be helpful for my more experienced colleagues. Now they can see what I expect, they would most likely not remain silent on something that I see as a blocker or might skip something with a good conscience.

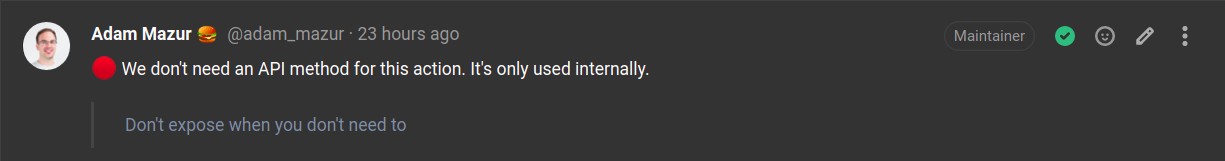

Here are some examples:

How does it speed up the code review feedback loop? As a code author:

-

I don't have to think about what the reviewer expects

-

I don't have to apply all suggestions

-

I can start resolving comments from the most important (blocking) ones

Low effort, high impact.

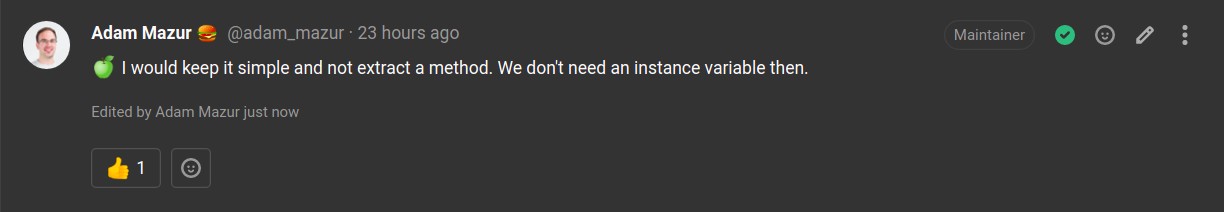

#2: Review summary

In the feedback that I received from my teammate, he shared how useful my review summaries have been. This is something that I was doing from time to time, especially when leaving quite a lot of comments. I don't have any strict template for such summary, but I try to always include:

-

What is my overall perception of the solution?

-

What are my expectations for next steps?

I usually use the former for praising, but when I really don't like the solution or the author missed the point - here is the best place to give that feedback.

In my expectations I try to express what needs to be done in order to move us closer to approval.

Such a summary read after all comments/suggestions should give the author a clear image of what I think about the solution and what should be the next steps. Of course, this is not something that I leave in every review. From my experience, it's mostly useful for bigger pull requests, when leaving many comments.

#3: "I trust you" approval

This is a dead simple practice: Approve pull request when the only suggestions you left were “Improvements” In other words, when all warnings are resolved and there are no blockers, there is nothing stopping me from giving an approval.

If I waited for all the "Improvements" to be implemented, I would be adding an additional feedback loop.

I usually don't get back to the pull request after approval and don't check which suggestions were applied. I just trust my teammates that they will consider effort vs. impact and make the right choice. Even if not - these are just improvements that can be done later.

Before you start practicing this, discuss this with your team to avoid confusion when they receive 10 comments and an approval. Depending on your team and project, you may also consider setting explicit expectations. Example:

When you skip an improvement comment, always create a ticket and put it in the backlog with a

improvementlabel

This removes another review iteration.

#4: Quick call?

Async long discussions in Pull Request comments can take days. Sometimes when you see that after 10 comments in a thread or three days of ping-pong (when usually it takes two days to merge) you are still far from an agreement or are talking about two completely different things, it can be quicker to jump on a call, share your screen and resolve it that way. Just don't forget to leave a call summary in a thread.

At Global App Testing, we use Slack huddles for such cases. I wish we could have "GitLab huddles" that brings all the discussion participants in one audio room with just one click in the thread. If you know anyone at GitLab, please send them this article.

That could save even more iterations. Oh, if only I would do this always...

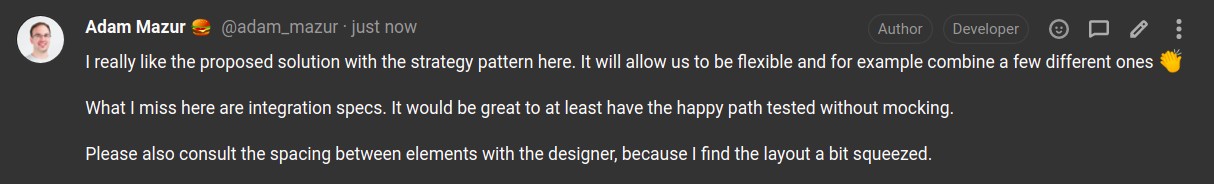

#5: Manual nudges

We tried some plugins and integrations that were automatically pinging us with pending Pull Requests waiting for reviews. While it was working for a while, pings quickly became ignored. :(

We decided on having a dedicated Slack channel where we started posting manual requests for reviews; it continues to work surprisingly well and has sparked a lot of creativity on how to incentivize people to check pull requests. Just look at these:

We use the same channel to ask about the next iteration after we apply all the fixes (which is harder to automate).

I observed that it's easier to ignore robots than people (especially when you see the effort they put into creating a meme). This reduced time to first reaction after making pull request ready for (re)review.

BONUS

If you're still reading (which I'm hoping you are), I will leave you with two more practices.

When you have a debate about different solutions or approaches, it's good to clearly communicate how strong your opinion is and be clear about who you expect to make the final decision.

If you state that you have a strong opinion, the author of the Pull Request will know that they either have to provide a strong argument or ask a third person to step in. It's less likely that they will skip your opinion. As a PR author, I sometimes ask the reviewer how strong their opinion is and find that it usually helps resolve the discussion. I imagine this is because such a question causes a reflection and cools down emotions.

When it comes to making the final decision, it depends on your role. If you are a tech lead or a code owner, the decision can be yours by default, but you can always explicitly delegate it to the PR author (when you think that a bad decision won't be that harmful). When the code author is your peer and you can't agree, it's always a good idea to ask a third person - ideally someone decisive.

Summary

The number of feedback loops is especially important in a remote/async environment, where as the author of the code, you must sometimes wait hours for the next review iteration. Four iterations might mean four days (maybe an extreme, but just imagine a remote cross-timezone environment). (In the office you can probably just sit together and go through the code - applying changes on the fly.)

I’ve shared a few practices that can help you significantly reduce the number of feedback loops and shorten them, so you can improve your delivery time. This article can be summarized by four main points:

-

Share overall perception

-

Be explicit about your expectations

-

Trust others

-

Adjust communication channels

By implementing these practices, you can shave off one iteration, which could sometimes save a whole day!

I'm curious to hear about your practices related to the code review process.