Effort vs. Reward – Scaling QA in a Start-up

As consumers expect, and demand, more from their online experiences, the pressure is on QA teams to deliver software at speed, without compromising on quality.

Building a QA team and process that strikes the right balance between the effort put in and the reward gained is therefore paramount to QA success.

At the 2022 Software Testing Industry Forum, Mo Taylor, Chief Product Officer of audio advertising platform A Million Ads, shared how he achieved this as the company transitioned from start-up to scale-up.

What part has Global App Testing played in their journey? Watch the recording or read our summary below.

Fancy taking our platform for a spin? Access free, unlimited testing for two whole weeks, no credit card required.

So, who are A Million Ads?

A Million Ads are a six-year-old scale-up offering dynamic audio advertising. They enable brands to serve highly targeted ads to their audiences, using factors such as weather, time of day and location to create personalised, relevant and memorable ads that deliver a high ROI.

Their impressive client list includes Disney, Tesco, Deliveroo, Coca-Cola and Sky, to name a few.

In this article, find out how the company went from a weekly deployment schedule like this:

Source: A Million Ads, 2022

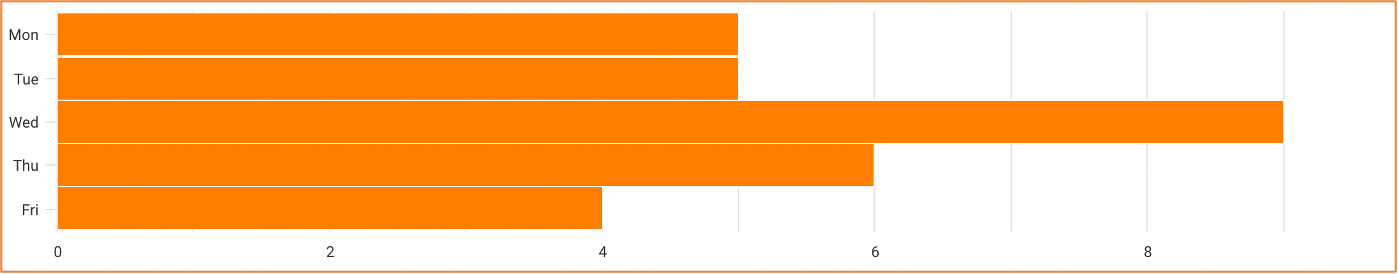

To this:

Source: A Million Ads, 2022

QA: The Early Days

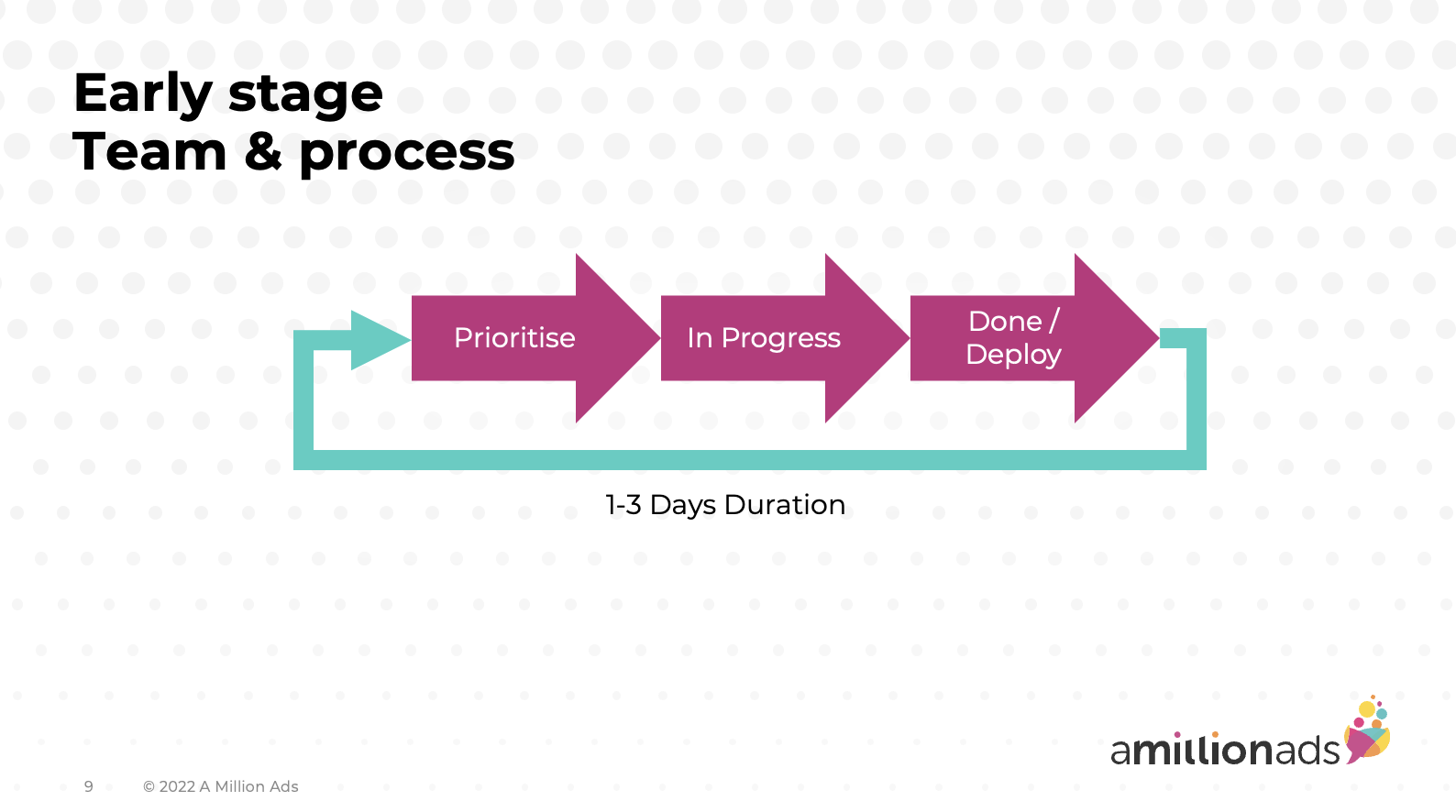

In the beginning, the QA process at A Million Ads was minimal and certainly not a key area of focus for the business. The priority was delivering a product, which meant the development team needed a very agile process from the start.

When it came to testing the software, however, there was no internal QA team. Instead, the engineering, creative and sales teams all played a role in testing.

As they started to scale up and gain investment, the product became more complex and their existing approach began to show signs of wear and tear.

Scaling up

With an office in central London, building a QA team internally was expensive (this was in the pre-pandemic days, when more teams worked from the office). Wanting to save on costs while keeping an agile process and their release velocity, they looked for an external partner to help with their software testing.

However, this came with its own challenges: an external partner would lack the in-depth product knowledge of an internal team. So they opted for a hybrid model, blending internal resources, who could be the product experts, with an external test partner. This is when we, Global App Testing (GAT), came into the picture, back in 2019.

The in-house tester would work with the engineering teams and then with GAT, giving the business scale and speed in its release cycles.

"That engagement with GAT started with them helping us write some test cases, so they got us off the ground immediately. And then we hired our internal resource and we started elaborating on all of those lovely test cases. So, we got to a really high level of test case coverage pretty quickly."

Mo Taylor, Chief Product Officer, A Million Ads

Growth pains: automation vs manual testing

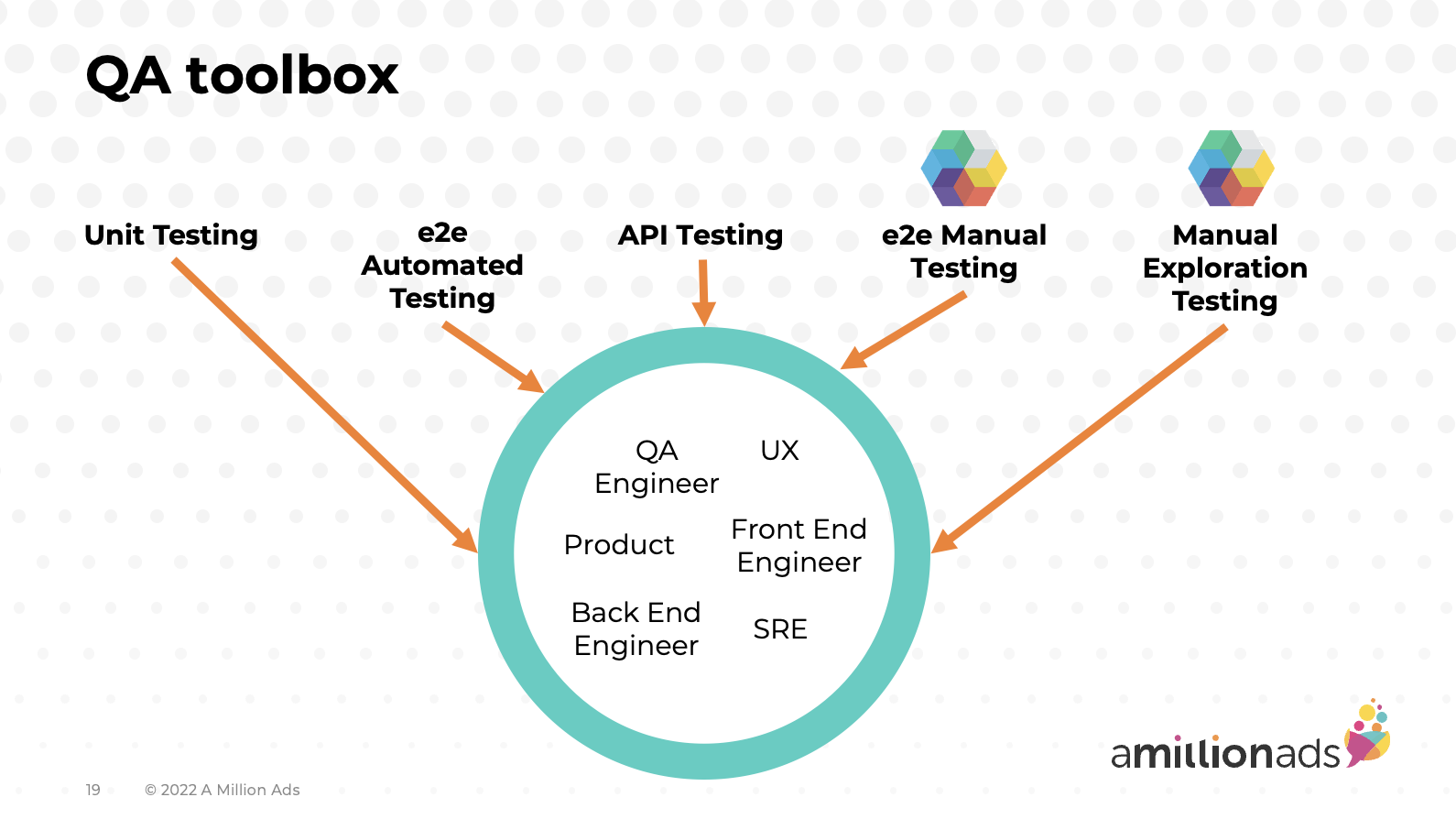

As the QA process gained momentum, the A Million Ads team started investing in API testing, unit testing and end-to-end (E2E) automated testing, with the aim of reducing manual testing and keeping it to low levels of beta feature testing when required.

But this approach started to have a negative impact on their Software Development Life Cycle (SDLC). The effort being put into E2E testing wasn't delivering any reward or adding any value. Building automated tests also became expensive for the business, because tests written against a complex user interface required a lot of maintenance.

The manual testing that GAT was involved in, however, was delivering far more value than the automated E2E tests.

Further challenges were emerging within the internal QA team, who were beginning to feel isolated from the development team: they were seen as an afterthought rather than being at the centre of the process.

Finding the right schedule with GAT

The effort A Million Ads were putting into manual testing was low, with GAT taking this burden off their shoulders. Tests would be pushed over to our community of testers at GAT by the end of each Friday, so that by Monday morning the development team could hit the ground running, triaging any bugs that came back.

However, this strategy had flaws. By the time it came to fixing bugs, the engineers had moved on to something else, often a different part of the codebase. The bugs being found started to become an ‘interrupt’, as developers had to down tools on new projects to fix bugs from the previous week.

What's more, working this way meant that quality and testability were not at the forefront of the engineers' minds, and they were losing touch with the product's end users.

Team re-architecture and the perfect QA toolbox

To improve internal processes, A Million Ads brought their QA team into 'the gang'. This gave QA a much more strategic role from the start of each new project, offering insight into how testing fits into the release cycle. It also gave them influence over how code is built, how it is released and when it can be released.

With this new structure came the need for a more robust QA toolbox that could support the different types of testing required for different features. For example, one feature may require extensive unit testing, while a prototype may just need some quick user feedback.

"No longer were we trying to knock down the number of manual test cases that we were putting over to GAT at the weekend, but actually thinking: 'That's really good testing, we're getting really high value back from that level of testing. What can we do to improve the process for our engineers?'"

Mo Taylor, CPO, A Million Ads

Getting immediate user feedback

Despite these changes, A Million Ads found that the automated E2E tests they were running overnight were in fact slowing them down. To get more immediate feedback, they moved these tests into their CI/CD pipeline, so the tests run whenever a developer makes a commit or opens a pull request.

They then used the Global App Testing SaaS platform to get immediate end-user feedback by running exploratory tests on any new features. This has proved invaluable: they can get early feedback within a couple of hours from the GAT testing community (or their 'beta users', as Mo's team thinks of them), which acts as an extension of their internal team.

They can also run some black and white testing at the same time, so the internal QA team can quickly assess what's valuable in the results and the developers can act on any immediate feedback.

What are Mo's Top Tips?

- Think about your QA process from the outset

- Seek external help from a partner, such as GAT, to scale up and gain skills

- Always think about the effort you are putting in vs the reward you get out

- Make sure QA isn't an afterthought

To test faster and more efficiently, give our platform a spin around the block for two weeks on us.

Future plans with GAT

The team would like to move away from weekend manual testing towards an always-on testing process that completes partial runs of their test case document throughout the week. GAT are working with them to do just that.