What Is Performance Testing and How Does It Work?

Picture this: you have built an application that is packed with exciting features. However, during the launch, the unexpected happens: your application can't handle the user load, and the system crashes. This is why running performance tests before you release an app is a key step in the release process. They provide valuable insights into how well your application will perform under different user loads. In this article, we will explore what performance testing is, why it is important, and how you can get started. Let’s begin!

We can help you drive performance testing as a key initiative aligned to your business goals

What is performance testing?

Performance testing is a type of software testing that checks a system's performance under different workloads. It measures system response times, resource usage, throughput, and other metrics to identify bottlenecks or other performance issues. Performance testing can be done at different stages of the software development life cycle.

What are performance testing types?

There are various types of performance testing, each serving a different purpose and producing different results. For example:

- Load testing: Measures how the system behaves under expected levels of user or system activity, typically by ramping up to the anticipated peak.

- Stress testing: Tests the system beyond its expected or peak load to find failure points or capacity limitations.

- Soak testing: Tests the system under a continuous load for an extended period to identify performance degradation or memory leaks.

- Spike testing: Measures how well the system responds to sudden spikes in traffic or activity.

- Volume testing: Tests the system's ability to manage large volumes of data or transactions.

- Endurance testing: Another name for soak testing; checks how well the system performs over a prolonged period of time.

- Compatibility testing: Tests the system's compatibility with different operating systems, browsers, devices, or platforms.

- Regression testing: Tests whether a system still performs as expected after changes or updates have been made.

- Scalability testing: Measures how well the system can handle increased workload and system demands.

- Resilience testing: Tests the system's ability to recover from failure or disruption.

- Functional testing: Tests whether the system meets the functional requirements and works as intended.

- Reliability testing: Tests the system's ability to deliver consistent results over time.
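The difference between load, stress, soak, and spike testing is largely a difference in the shape of the load over time. The sketch below illustrates that idea only; the function name `target_users`, the profile names, and every ratio in it (20% ramp, twice the peak, 70% steady load, a burst in the middle tenth) are assumptions chosen for illustration, not standard values.

```python
def target_users(profile: str, t: float, duration: float, peak: int) -> int:
    """Return how many simulated users should be active at time t (seconds)."""
    if not 0 <= t <= duration:
        return 0
    if profile == "load":
        # Ramp up linearly over the first 20% of the run, then hold the expected peak.
        ramp = 0.2 * duration
        return peak if t >= ramp else int(peak * t / ramp)
    if profile == "stress":
        # Keep climbing past the expected peak, up to twice its value.
        return int(2 * peak * t / duration)
    if profile == "soak":
        # Hold a steady, moderate load for the whole (long) run.
        return peak * 7 // 10
    if profile == "spike":
        # Low baseline with a sudden burst in the middle tenth of the run.
        in_burst = 0.45 * duration <= t <= 0.55 * duration
        return peak if in_burst else peak // 10
    raise ValueError(f"unknown profile: {profile}")
```

A load generator could call a function like this once per second to decide how many virtual users to keep active.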

Why is performance testing important?

Performance testing helps ensure the quality, reliability, and scalability of software applications. Measuring the system's performance under load and stress shows whether the application can handle the required load without errors or downtime and still meet users' needs. Additionally, performance testing helps detect performance issues early in the development cycle, so they can be fixed before they cost more time and money later in the release cycle.

Performance testing advantages

Here are a few advantages of performance testing:

- Early identification and mitigation of potential performance issues.

- Reduced system downtime, resulting in increased system availability and reliability.

- Improved user experience due to faster system response times and effective load management.

Performance testing disadvantages

Here are some potential disadvantages of performance testing:

- High costs associated with setting up performance testing environments and tools.

- Difficulty in simulating real-world scenarios and user behavior, leading to inaccurate results in some cases.

- Significant time and resources needed to design, develop, and execute performance testing scripts and scenarios, which can lengthen development cycles.

When is the right time for performance testing?

Performance testing should be conducted at various stages of the software development life cycle, including the design, development, and deployment phases. Ideally, it should start as early as possible in the development cycle and continue throughout the software delivery pipeline. This ensures that potential performance issues are identified and addressed as early as possible. Performance testing can also be conducted during user acceptance testing or before releasing the software into production.

Performance testing metrics

Performance testing metrics are key to measuring and analyzing the performance of software applications. Let’s take a closer look at some of the metrics commonly used in performance testing:

- Load time: Amount of time it takes for a web page or application to load in a user's browser.

- Response time: Measures how long it takes for a system to respond to a user request.

- Error rate: Indicates the percentage of failed transactions compared to the total transactions processed, highlighting system stability and reliability.

- Scalability: Measures how well a system can handle an increasing number of users or a growing workload.
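Several of these metrics can be derived from the same raw data: one record per request with its duration and outcome. The following is a minimal sketch under that assumption; the `Sample` type, the `summarize` function, and the result keys are hypothetical names, and the 95th percentile is included because averages alone tend to hide slow outliers.

```python
from dataclasses import dataclass
from statistics import mean, quantiles


@dataclass
class Sample:
    latency_s: float  # time from request to response, in seconds
    ok: bool          # True if the request succeeded


def summarize(samples: list[Sample], window_s: float) -> dict[str, float]:
    """Summarize one test window; needs at least two samples."""
    latencies = [s.latency_s for s in samples]
    failures = sum(1 for s in samples if not s.ok)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = quantiles(latencies, n=100, method="inclusive")[94]
    return {
        "avg_response_s": mean(latencies),
        "p95_response_s": p95,
        "error_rate_pct": 100.0 * failures / len(samples),
        "throughput_rps": len(samples) / window_s,
    }
```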

Below, you can find additional metrics that can impact the performance of the product.

Additional performance testing metrics

Here are some additional metrics:

- Throughput: Amount of work the system processes per unit of time, typically measured in transactions or requests per second.

- Amount of connection pooling: Measures how many connections are used by the application's connection pool.

- Committed memory: Amount of memory the application has committed to using.

- Memory pages per second: Rate at which memory pages are read from or written to the disk to resolve hard page faults.

- Page faults per second: Rate at which the application requests memory pages that are not currently in its working set; hard faults have to be retrieved from the hard disk.

- CPU interrupts per second: Rate at which the CPU is handling interrupts.

- Disk queue length: Number of disk requests queued and waiting to be processed.

- Network output queue length: Number of network requests waiting in the output queue.

- Network bytes total per second: Rate at which data is transmitted over the network.

- Private bytes: Amount of memory allocated to the application's process that cannot be shared with other processes.

- Maximum active sessions: Maximum number of active sessions allowed by the application.

- Hit ratio: Percentage of times that the application can find requested data in the cache.

- Hits per second: Number of requests that reach the web server during each second of a test.

- Rollback segment: Amount of data that needs to be rolled back when a transaction is rolled back.

- Database locks: Number of database locks required by the application.

- Top waits: Amount of time the application spends waiting on specific events or requests.

- Thread counts: Number of threads being used by the application.

- Garbage collection: Amount of memory being freed by garbage collection.
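Most of these counters come from the operating system, database, or runtime, but a few can be read directly in application code. As one small illustration, the sketch below computes a cache hit ratio from the counters that Python's `functools.lru_cache` exposes through `cache_info()`. The `load_profile` function is a hypothetical stand-in for a slow lookup, and the sequence of user IDs is made up.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def load_profile(user_id: int) -> str:
    # Hypothetical stand-in for a slow database or network lookup.
    return f"profile-{user_id}"


def hit_ratio_pct() -> float:
    """Percentage of lookups that were served from the cache so far."""
    info = load_profile.cache_info()
    lookups = info.hits + info.misses
    return 100.0 * info.hits / lookups if lookups else 0.0


# Simulated traffic: three distinct users, some of them requested repeatedly.
for user_id in [1, 2, 1, 3, 1, 2]:
    load_profile(user_id)
```

A low hit ratio during a load test can point to a cache that is too small or to keys that rarely repeat.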

How does performance testing work?

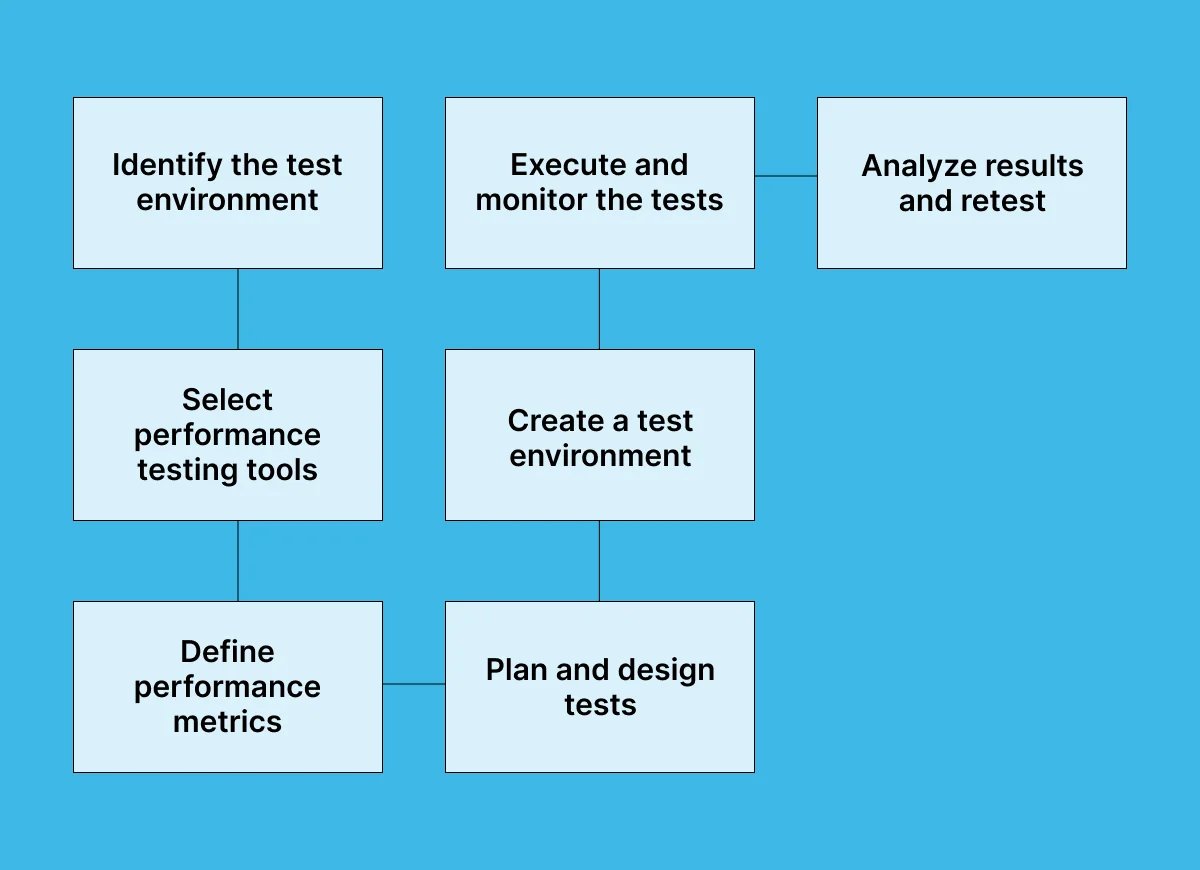

The steps for performance testing depend on the application's type, size, complexity, and objectives. However, the overall goal of performance testing remains consistent: to deliver a high-performing, reliable, and scalable product. To achieve this goal, most performance testing plans generally follow a workflow consisting of planning, designing, executing, and analyzing various performance test scenarios. Let’s explain this in more detail.

1. Identify the test environment

Depending on the application, this can be the actual user environment or a simulated environment that replicates the user environment. This involves considering various factors such as hardware specifications, network configurations, software versions, and user demographics. The end goal of this step is to ensure that the tests are carried out in an environment that closely resembles the end-user conditions.

2. Select performance testing tools

Select the appropriate tools and software that best fit the application's performance testing needs. The selection can vary depending on the system's complexity, the business requirements, or the testing team's preferences. This step ensures that the chosen tools align with the characteristics and demands of the application under evaluation. Additionally, you should factor in scalability, compatibility, ease of integration, and reporting capabilities for the selected tool.

3. Define performance metrics

Set clear performance targets, objectives, and metrics to measure the system's performance against expected criteria. By defining these parameters, testing teams can align their efforts with the expected criteria, which include response time, throughput, resource utilization, error rate, and other relevant metrics specific to the system.
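Targets are easiest to enforce when they are written down as data rather than prose. Here is a minimal sketch of that idea; the `Targets` fields, the `check` function, and the metric keys are hypothetical, and the numbers in the usage note are placeholders rather than recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Targets:
    max_p95_response_s: float
    max_error_rate_pct: float
    min_throughput_rps: float


def check(measured: dict[str, float], targets: Targets) -> list[str]:
    """Return human-readable violations; an empty list means all targets are met."""
    violations = []
    if measured["p95_response_s"] > targets.max_p95_response_s:
        violations.append(
            f"p95 response time {measured['p95_response_s']}s exceeds "
            f"{targets.max_p95_response_s}s"
        )
    if measured["error_rate_pct"] > targets.max_error_rate_pct:
        violations.append(
            f"error rate {measured['error_rate_pct']}% exceeds "
            f"{targets.max_error_rate_pct}%"
        )
    if measured["throughput_rps"] < targets.min_throughput_rps:
        violations.append(
            f"throughput {measured['throughput_rps']} req/s is below "
            f"{targets.min_throughput_rps} req/s"
        )
    return violations
```

For example, `check(results, Targets(1.0, 1.0, 100.0))` would flag a run whose 95th percentile response time is above one second.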

4. Plan and design tests

Create a plan for different types of performance tests, such as load testing, stress testing, and volume testing. The plan should be designed to ensure that the system is tested under realistic scenarios and loads that match the demands of the expected users. Additionally, the plan should include backup measures to handle unexpected issues that might occur during testing.
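One way to keep scenarios realistic is to weight them by how often real users perform each action. The sketch below assumes a made-up traffic mix (70% browsing, 20% searching, 10% checking out); the scenario names, the weights, and the `pick_scenarios` helper are all hypothetical and would normally come from analytics data.

```python
import random

# Hypothetical traffic mix; real weights should come from production analytics.
SCENARIO_WEIGHTS = {"browse": 70, "search": 20, "checkout": 10}


def pick_scenarios(count: int, seed: int = 0) -> list[str]:
    """Draw a reproducible sequence of scenarios according to the weights."""
    rng = random.Random(seed)  # seeded so a test run can be repeated exactly
    names = list(SCENARIO_WEIGHTS)
    weights = list(SCENARIO_WEIGHTS.values())
    return rng.choices(names, weights=weights, k=count)
```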

5. Create a test environment

Set up a test environment that best fits the application and performance testing tools being used. This may include configuring servers, networks, databases, and other related components, as well as ensuring the test environment adequately replicates the actual user environment.

6. Execute and monitor the tests

Execute the performance tests based on the test plan and monitor the system's performance closely. All performance indicators and metrics should be documented and recorded during the tests. This will allow for the appropriate analysis and reporting of the test results.
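Dedicated tools handle execution and monitoring in practice, but the core loop is simple: run many requests concurrently and record how long each one took and whether it succeeded. The following is a minimal sketch using only the Python standard library. `fake_request` is a hypothetical stand-in that just sleeps, and `timed_call` and `run_load` are illustrative names, not part of any testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request() -> bool:
    """Hypothetical stand-in for a real call to the system under test."""
    time.sleep(0.01)
    return True


def timed_call(action) -> tuple[float, bool]:
    """Run one action and return (elapsed seconds, success flag)."""
    start = time.perf_counter()
    try:
        ok = bool(action())
    except Exception:
        ok = False  # any exception counts as a failed request
    return time.perf_counter() - start, ok


def run_load(action, users: int, requests_per_user: int) -> list[tuple[float, bool]]:
    """Issue users * requests_per_user calls with up to `users` running at once."""
    total = users * requests_per_user
    with ThreadPoolExecutor(max_workers=users) as pool:
        return list(pool.map(lambda _: timed_call(action), range(total)))
```

Recording every individual result, rather than only a running average, keeps the raw data available for later analysis.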

7. Analyze results and retest

Analyze performance test results to identify the cause of any performance issues or system failures. Based on the results, the test plan and system can be adjusted, and the tests can be re-run. This step is repeated until satisfactory results are achieved and the application can be released.
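When tests are re-run after a change, comparing the new numbers against a saved baseline makes regressions easy to spot. Here is a minimal sketch of such a comparison. The `regressions` function and its 10% default tolerance are assumptions, and it only handles metrics where lower is better, such as response time or error rate.

```python
def regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance_pct: float = 10.0,
) -> dict[str, float]:
    """Return metrics that got worse by more than tolerance_pct, with the % change.

    Assumes every metric is "lower is better" (e.g. response time, error rate).
    """
    worse = {}
    for name, old in baseline.items():
        new = current.get(name)
        if new is None or old <= 0:
            continue  # skip metrics that are missing or have no usable baseline
        change_pct = 100.0 * (new - old) / old
        if change_pct > tolerance_pct:
            worse[name] = change_pct
    return worse
```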

Conclusion

Performance testing is the shield that protects applications from the chaos that can follow when users flood the system. It addresses questions like "Will the application hold up under pressure?" and "Can it handle the expected workload and still remain stable?" Performance testing gives you the confidence to release your application to the world, knowing that it has been tested against the expected user demand.

How can Global App Testing assist with performance testing?

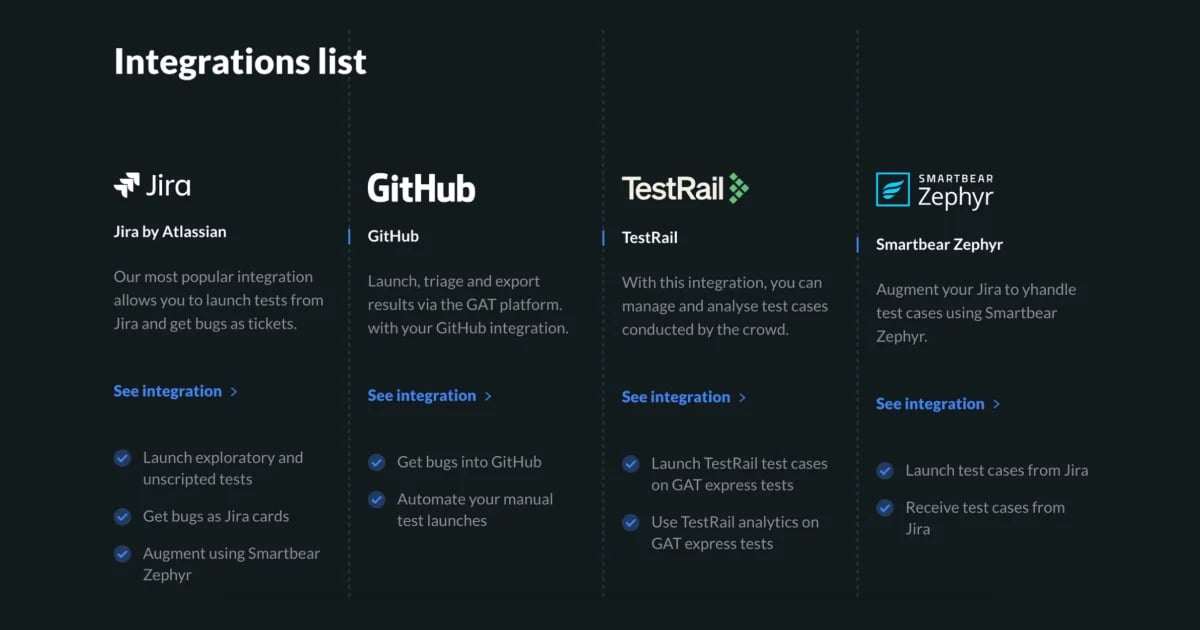

Global App Testing offers performance testing by providing a network of skilled testers and wide device coverage. Our simple-to-use platform identifies and resolves app bugs, delivering insights and reports that streamline the testing process. Additionally, we offer unique features that add even more value to performance testing:

Streamlined and unified platform:

- Our user-friendly platform integrates with your development workflow, enhancing efficiency.

- Effortlessly oversee testing projects, monitor advancements, and engage with testers via a centralized dashboard, promoting teamwork.

- Access detailed insights into each discovered bug's context and receive visual evidence for improved issue comprehension.

Fast and dependable service:

- Our 24/7 service ensures timely delivery of test results, ranging from 6 to 48 hours, accommodating urgent testing needs, including overnight launches.

Worldwide user feedback:

- Tap into a network of more than 90,000 testers spanning 190+ countries, providing diverse insights and comprehensive testing across the globe.

Quality assurance:

- To guarantee product quality and reliability, we utilize a range of testing methodologies, such as functional, compatibility, usability, and performance testing.

Extra support:

- You can access a plethora of guides, webinars, and articles to aid in exploring and adopting performance testing best practices.

Ready to learn more? Take the next step and sign up now to schedule a brief call today!


FAQ

What is the difference between performance testing and performance engineering?

Performance testing focuses on evaluating system performance through testing, while performance engineering involves designing and optimizing systems for better performance throughout the development lifecycle.

What are the best practices for conducting performance testing?

Best practices for performance testing include defining clear objectives, using realistic test scenarios, monitoring system resources during tests, analyzing results comprehensively, and implementing iterative improvements.

How can performance testing help improve business outcomes?

Performance testing helps enhance user satisfaction, reduce downtime, mitigate risks, improve brand reputation, and ultimately drive business success by uncovering and addressing performance issues early in the development process.
